A standard feedforward neural network treats each input independently; it has no “memory” of earlier inputs.

The first approach is to add a recurrent connection to the network, so that the output still carries information from earlier inputs. This is called a Recurrent Neural Network (RNN), but training it often runs into the vanishing gradient problem.
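To make the recurrence concrete, here is a minimal sketch of a scalar vanilla RNN, assuming the common form h_t = tanh(w_h * h_{t-1} + w_x * x_t + b). The function names and the weight values are made up for illustration.

```python
import math

def rnn_step(h_prev, x, w_h=0.5, w_x=1.0, b=0.0):
    """One step of a scalar vanilla RNN (weights are illustrative assumptions)."""
    return math.tanh(w_h * h_prev + w_x * x + b)

def run_rnn(xs, h0=0.0):
    """Feed a sequence through the RNN and collect every hidden state."""
    h = h0
    states = []
    for x in xs:
        h = rnn_step(h, x)  # the previous state is fed back in: this is the "memory"
        states.append(h)
    return states

# Only the first input is non-zero, yet later states are still non-zero:
# the hidden state carries (fading) information about that first input.
states = run_rnn([1.0, 0.0, 0.0, 0.0])
print(states)
```

The later states shrink at every step, which already hints at why information from far back is hard to keep.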

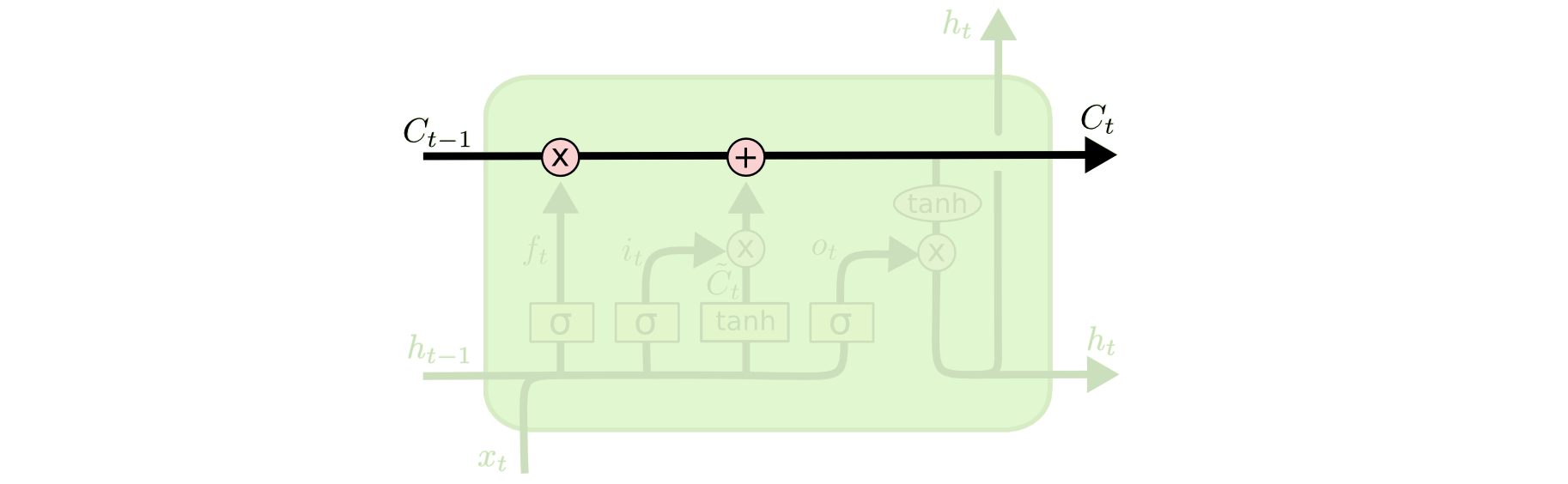

Here $C_{t-1}$ and $C_t$ are the cell state, which lets information pass through unchanged.

But for an RNN, learning long-term dependencies with gradient descent is hard: http://ai.dinfo.unifi.it/paolo//ps/tnn-94-gradient.pdf
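A rough way to see the difficulty: for a scalar RNN of the assumed form h_t = tanh(w_h * h_{t-1} + w_x * x_t), the chain rule gives dh_T/dh_0 as a product of per-step factors w_h * (1 - h_t^2). The sketch below (hypothetical function name, made-up weights) multiplies these factors over 20 steps.

```python
import math

def gradient_through_time(xs, w_h=0.5, w_x=1.0, h0=0.0):
    """Return d h_t / d h_0 after each step of a scalar tanh RNN."""
    h = h0
    grad = 1.0
    grads = []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)
        grad *= w_h * (1.0 - h * h)  # local derivative d h_t / d h_{t-1}
        grads.append(grad)
    return grads

grads = gradient_through_time([0.5] * 20)
print(grads[0], grads[-1])  # the last value is many orders of magnitude smaller
```

With |w_h| < 1 every factor is below 1 in magnitude, so the product shrinks geometrically and the earliest inputs receive almost no gradient signal.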

The LSTM was then designed as a special kind of RNN with three gates that control the cell state: the input gate, the forget gate, and the output gate.

Forget Gate

- $h_{t-1}$ is the output of the previous cell (the whole green box above).

- $x_t$ is the input of the current cell.

- $\sigma$ is the sigmoid function: its result is between 0 and 1, where 0 means forget everything and 1 means keep everything.

- $f_t$ is the result of the sigmoid function.
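The pieces above can be sketched in code, assuming the standard formulation $f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)$. The names `W_f` and `b_f` and all the numbers are assumptions for illustration, not values from a trained model.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forget_gate(h_prev, x_t, W_f, b_f):
    """f_t = sigmoid(W_f . [h_prev, x_t] + b_f), one value per cell-state entry."""
    concat = h_prev + x_t  # list concatenation, i.e. [h_{t-1}, x_t]
    return [
        sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
        for row, b in zip(W_f, b_f)
    ]

h_prev = [0.1, -0.2]                 # previous output (hypothetical values)
x_t = [1.0]                          # current input (hypothetical value)
W_f = [[0.5, -0.3, 0.8],             # made-up weights, one row per state entry
       [0.0, 0.0, 0.0]]
b_f = [0.0, 10.0]                    # a large bias pushes the gate towards "keep"

f_t = forget_gate(h_prev, x_t, W_f, b_f)
print(f_t)
```

Every entry of `f_t` lies strictly between 0 and 1; the second entry is close to 1 because of its large bias, so that part of the previous state would be kept almost entirely.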

Input Gate

A sigmoid layer decides which information needs to be updated. $i_t$ is the result of the sigmoid, so we can use $i_t * \tilde{C}_t$ as the scaled value, which means how much we decided to update each state value. Similarly, $f_t * C_{t-1}$ is how much we want to keep from the previous state.

A tanh layer produces the candidate values $\tilde{C}_t$ that are used to update the old information.

- $C$ is the cell information, or cell state; $C_{t-1}$ has been updated to $C_t = f_t * C_{t-1} + i_t * \tilde{C}_t$.

- Then we use $C_t$ as the new cell state passed on to the next cell.
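The update above can be sketched as follows, assuming the standard formulation $i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)$, $\tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C)$ and $C_t = f_t * C_{t-1} + i_t * \tilde{C}_t$. The forget gate output `f_t` is passed in directly here, and all names and numbers are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W . v + b for a list-of-rows matrix W."""
    return [sum(w * e for w, e in zip(row, v)) + bias for row, bias in zip(W, b)]

def update_cell_state(C_prev, f_t, h_prev, x_t, W_i, b_i, W_c, b_c):
    concat = h_prev + x_t                                       # [h_{t-1}, x_t]
    i_t = [sigmoid(z) for z in affine(W_i, b_i, concat)]        # how much to update
    C_tilde = [math.tanh(z) for z in affine(W_c, b_c, concat)]  # candidate values
    # keep part of the old state, add the scaled candidates
    return [f * c + i * g for f, c, i, g in zip(f_t, C_prev, i_t, C_tilde)]

C_t = update_cell_state(
    C_prev=[1.0, -2.0],
    f_t=[1.0, 0.0],                  # keep entry 0 fully, forget entry 1 fully
    h_prev=[0.0],
    x_t=[1.0],
    W_i=[[0.0, 0.0], [0.0, 0.0]],    # zero weights give i_t = 0.5 everywhere
    b_i=[0.0, 0.0],
    W_c=[[0.0, 1.0], [0.0, -1.0]],   # candidates are tanh(1) and tanh(-1)
    b_c=[0.0, 0.0],
)
print(C_t)
```

Entry 0 keeps its old value and adds half of the candidate; entry 1 drops its old value and holds only half of the candidate.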

Output Gate

Based on the cell information, we get the output $h_t$, which is also the $h_{t-1}$ for the next cell.

- $o_t$ is calculated by the sigmoid layer.

- The output $h_t$ is computed as $o_t$ times the cell information passed through the tanh layer (which squashes it to between -1 and 1): $h_t = o_t * \tanh(C_t)$.
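As a small sketch of this last step, assuming the standard formulation $o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)$ and $h_t = o_t * \tanh(C_t)$. The names `W_o` and `b_o` and the numbers are assumptions for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def output_gate(C_t, h_prev, x_t, W_o, b_o):
    """Return (o_t, h_t) for one LSTM step, given the updated cell state C_t."""
    concat = h_prev + x_t  # [h_{t-1}, x_t]
    o_t = [
        sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
        for row, b in zip(W_o, b_o)
    ]
    # tanh squashes the cell state into (-1, 1); o_t decides how much of it to expose
    h_t = [o * math.tanh(c) for o, c in zip(o_t, C_t)]
    return o_t, h_t

o_t, h_t = output_gate(
    C_t=[2.0, -3.0],
    h_prev=[0.0],
    x_t=[1.0],
    W_o=[[0.0, 0.0], [0.0, 5.0]],  # made-up weights
    b_o=[0.0, 0.0],
)
print(o_t, h_t)
```

Because $o_t$ is in (0, 1) and the tanh output is in (-1, 1), every entry of `h_t` also stays within (-1, 1).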

LSTM Variants

Gated Recurrent Unit (GRU): it has a reset gate and an update gate.
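For reference, here is a minimal scalar sketch of one common GRU formulation: the update gate $z_t$ and the reset gate $r_t$ are sigmoids of $h_{t-1}$ and $x_t$, the candidate is $\tilde{h}_t = \tanh(w \cdot (r_t * h_{t-1}) + u \cdot x_t + b)$, and $h_t = (1 - z_t) * h_{t-1} + z_t * \tilde{h}_t$. Some references swap the roles of $z_t$ and $1 - z_t$. The parameter names and values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(h_prev, x_t, p):
    """One scalar GRU step; p is a dict of illustrative parameters."""
    z = sigmoid(p["w_zh"] * h_prev + p["w_zx"] * x_t + p["b_z"])  # update gate
    r = sigmoid(p["w_rh"] * h_prev + p["w_rx"] * x_t + p["b_r"])  # reset gate
    # the reset gate scales how much of the old state enters the candidate
    h_tilde = math.tanh(p["w_hh"] * (r * h_prev) + p["w_hx"] * x_t + p["b_h"])
    # the update gate interpolates between the old state and the candidate
    return (1.0 - z) * h_prev + z * h_tilde

base = {"w_zh": 0.0, "w_zx": 0.0, "b_z": 0.0,
        "w_rh": 0.0, "w_rx": 0.0, "b_r": 0.0,
        "w_hh": 0.0, "w_hx": 1.0, "b_h": 0.0}

keep = gru_step(0.7, 1.0, {**base, "b_z": -50.0})     # z close to 0: keep old state
replace = gru_step(0.7, 1.0, {**base, "b_z": 50.0})   # z close to 1: take candidate
print(keep, replace)
```

With the update gate near 0 the old state passes through almost unchanged, and near 1 it is replaced by the candidate, so a single gate covers both the "forget" and "input" roles here.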